Meta is finally extending its Teen Accounts to Facebook and Messenger, and I think it’s a huge step in the right direction. After initially rolling out this feature on Instagram last year, the company is now making it easier for teens to engage with social media safely.

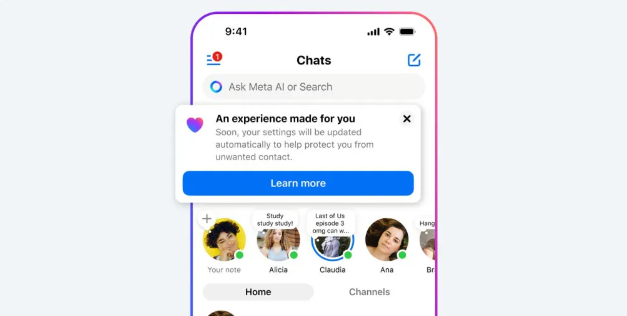

Image: Google

Teen Accounts are a curated experience for users under the age of 16, and they’re packed with default safety measures. When young users join Facebook or Messenger in the U.S., U.K., Australia, or Canada, they’re automatically enrolled in a version of the app designed with built-in protections.

I’ve been following Meta’s safety initiatives for teens for a while, and this one stands out because it makes safety the default rather than an opt-in. Parents also gain more control, since teens will need a parent’s permission to change important settings.

Key Features of Teen Accounts on Facebook and Messenger

Meta’s blog post didn’t cover every detail, but the company shared more in an email to TechCrunch. Here’s what we know so far:

- Teens can only receive messages from people they already follow or have communicated with before.

- Only friends can see and reply to a teen's story.

- Mentions, comments, and tags are restricted to people on their friends list.

- Teens will receive reminders to log off after using the apps for an hour each day.

- They’re also placed in Quiet Mode overnight by default.

I personally think that last point is super important—overnight usage is a big contributor to sleep loss among teens, and Meta is finally stepping in.

Instagram’s New Restrictions for Teens

Meta is also updating Teen Accounts on Instagram with new rules. I was glad to see they’re addressing live streaming and DMs, which have been problematic areas in the past.

Here are the new Instagram rules for users under 16:

- Teens cannot go live unless a parent gives permission.

- Parental consent is required to disable image blurring for suspected nudity in DMs.

These changes give parents even more control over content that could be inappropriate or dangerous, which should reassure parents and tech watchers alike.

Meta Responds to Teen Safety Concerns

The U.S. Surgeon General and lawmakers across several states have raised concerns about teen mental health and social media. As someone who writes about tech regularly, I’ve noticed a shift—platforms can no longer ignore their role in teen well-being.

Meta’s move seems to be a direct response to these concerns. According to Meta, over 54 million teens have already been moved into Teen Accounts on Instagram, and 97% of users aged 13 to 15 have kept the default protections turned on. That’s a high retention rate for the default settings and a promising sign that the system is working.

What Parents Think About Teen Accounts

Meta even backed up its move with a survey conducted by Ipsos. As someone who values data-driven decisions, I found these stats impressive:

- 94% of parents said Teen Accounts help them better support their child’s social media experience.

- 85% felt it made managing their teen's Instagram use easier.

Those numbers tell me that Meta’s strategy is not just performative—it’s actually resonating with families.

Meta’s decision to bring Teen Accounts to Facebook and Messenger is not just smart—it’s necessary. As someone who covers tech, I see daily how social platforms shape teen behavior and well-being. This move could set a new standard for how platforms protect younger users.

More importantly, it’s not just about filters and reminders. It’s about changing the culture of online interaction for teens and making platforms safer by default.

If you're a parent, content creator, or someone who cares about tech and teen safety, keep an eye on how this rollout evolves globally. Meta says more regions are coming soon—and hopefully, more platforms will follow this lead.
